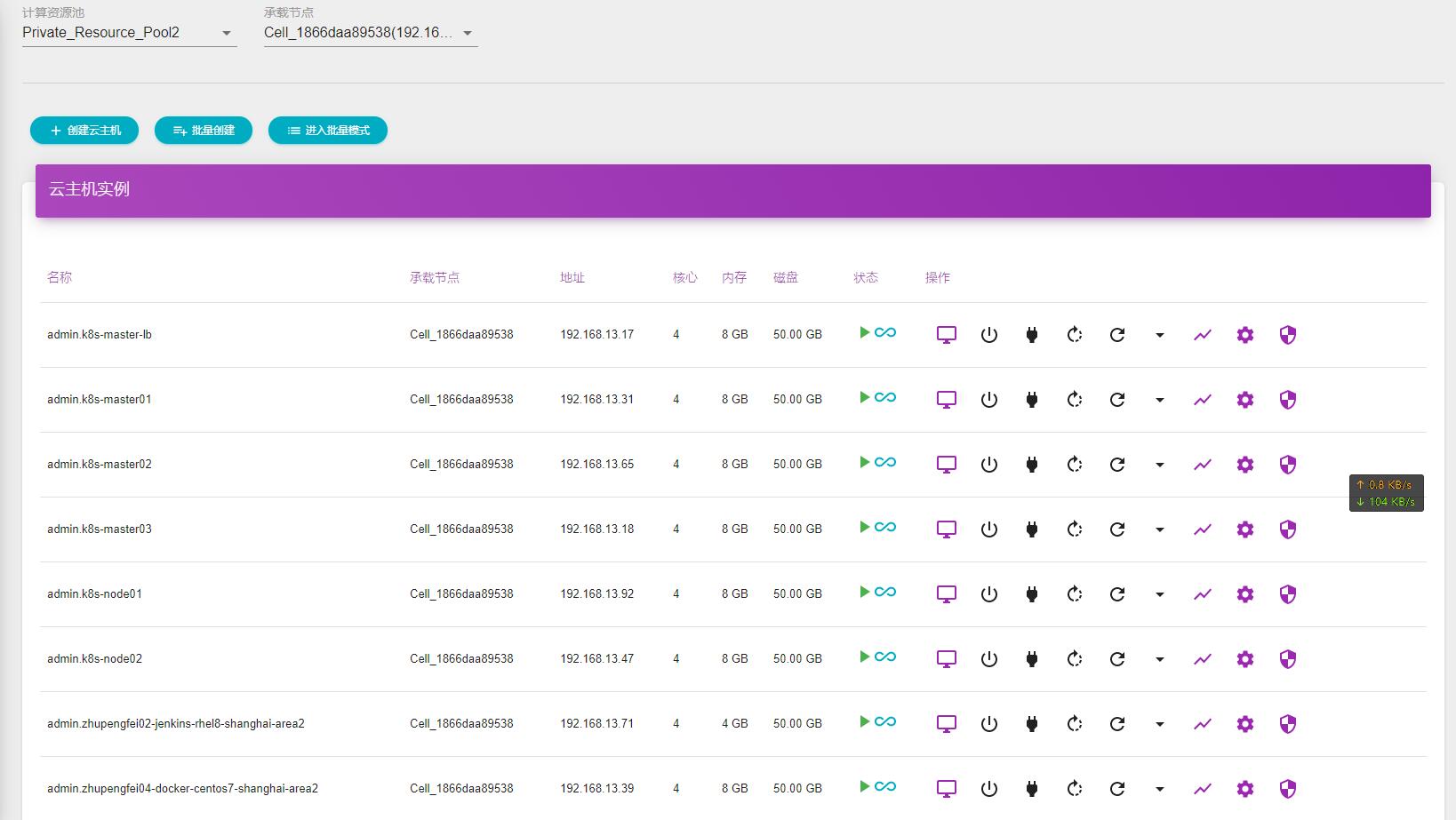

Kubernetes 1.20.x is deployed with the kubeadm tool, and high availability is provided by HAProxy + Keepalived.

Node plan

| Hostname | IP | Role | Spec |
|---|---|---|---|
| k8s-master01 | 192.168.13.31 | Master / Worker node | 4 CPU, 8 GB RAM, 50 GB disk |
| k8s-master02 | 192.168.13.65 | Master / Worker node | 4 CPU, 8 GB RAM, 50 GB disk |
| k8s-master03 | 192.168.13.18 | Master / Worker node | 4 CPU, 8 GB RAM, 50 GB disk |
| k8s-node01 | 192.168.13.92 | Worker node | 4 CPU, 8 GB RAM, 50 GB disk |
| k8s-node02 | 192.168.13.47 | Worker node | 4 CPU, 8 GB RAM, 50 GB disk |
| k8s-master-lb | 192.168.13.17 | VIP (managed by Keepalived) | N/A (virtual IP) |

Version information

| Item | Value |
|---|---|
| OS version | CentOS Linux release 7.9.2009 (Core) |
| Docker version | 20.10.5 |
| Kubernetes version | 1.20.4 |
| Pod CIDR | 172.168.0.0/16 |
| Service CIDR | 10.96.0.0/12 |
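The Pod CIDR above, 172.168.0.0/16, is not private address space: RFC 1918 reserves 10.0.0.0/8, 172.16.0.0/12 (172.16.0.0 to 172.31.255.255) and 192.168.0.0/16. It can still work inside an isolated cluster, but Pod IPs would then shadow real public addresses in that range. A minimal bash sketch (pure shell arithmetic, no extra tools) for checking planned CIDRs against RFC 1918 and against each other; the helper names are hypothetical, and the /24 node network is an assumption based on the node IPs:

```shell
#!/usr/bin/env bash
# Sketch: check planned CIDRs for RFC 1918 membership and overlap, bash arithmetic only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/} base mask start
  base=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed if CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 >= s2 && e1 <= e2 ))
}

# Succeed if CIDRs $1 and $2 share at least one address.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Succeed if CIDR $1 is inside one of the RFC 1918 private ranges.
is_rfc1918() {
  cidr_within "$1" 10.0.0.0/8 || cidr_within "$1" 172.16.0.0/12 || cidr_within "$1" 192.168.0.0/16
}

# Values from the plan above; the /24 node network is an assumption.
pod_cidr=172.168.0.0/16
svc_cidr=10.96.0.0/12
node_cidr=192.168.13.0/24

for cidr in "$pod_cidr" "$svc_cidr" "$node_cidr"; do
  if is_rfc1918 "$cidr"; then
    echo "$cidr: private (RFC 1918)"
  else
    echo "$cidr: NOT in RFC 1918 private space"
  fi
done

for pair in "$pod_cidr $svc_cidr" "$pod_cidr $node_cidr" "$svc_cidr $node_cidr"; do
  # Intentionally unquoted: split "a b" into two arguments.
  if cidr_overlap $pair; then echo "overlap: $pair"; else echo "no overlap: $pair"; fi
done
```

With the planned values, only the Pod CIDR is reported as outside RFC 1918, and none of the three ranges overlap.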

Basic configuration

[root@zhupengfei03-k8s-master01-centos7-shanghai-area2 ~]# cat /etc/hosts # configure /etc/hosts on all nodes

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.13.31 k8s-master01

192.168.13.65 k8s-master02

192.168.13.18 k8s-master03

192.168.13.17 k8s-master-lb # for a non-HA cluster, use Master01's IP here

192.168.13.92 k8s-node01

192.168.13.47 k8s-node02

[root@k8s-master01 ~]# swapoff -a && sysctl -w vm.swappiness=0

vm.swappiness = 0

[root@k8s-master01 ~]# sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab # on all nodes: turn swap off and comment it out in /etc/fstab so it stays off after a reboot
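The sed expression above prefixes # to every line that mentions swap before any existing #, so it is safe to run more than once. A dry run of the same expression on a sample file rather than /etc/fstab (the sample entries are hypothetical):

```shell
#!/usr/bin/env bash
# Dry run of the /etc/fstab edit on a sample file; the entries below are hypothetical.
set -eu
FSTAB=$(mktemp)
cat > "$FSTAB" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=1234-abcd          /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Prefix '#' to every line that mentions "swap" before any existing '#'.
sed -ri '/^[^#]*swap/s@^@#@' "$FSTAB"
# A second run changes nothing: commented lines no longer match /^[^#]*swap/.
sed -ri '/^[^#]*swap/s@^@#@' "$FSTAB"
cat "$FSTAB"
# On a node, "free -m" should then report 0 total swap.
```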

[root@k8s-master01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2523 100 2523 0 0 10713 0 --:--:-- --:--:-- --:--:-- 10736

[root@k8s-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master01 yum.repos.d]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

[root@k8s-master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Loaded plugins: fastestmirror

adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@k8s-master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

[root@k8s-master01 ~]# sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

[root@k8s-master01 ~]# yum clean all

Loaded plugins: fastestmirror

Cleaning repos: base docker-ce-stable epel extras kubernetes updates zabbix zabbix-non-supported

Cleaning up list of fastest mirrors

[root@k8s-master01 ~]# yum makecache

Loaded plugins: fastestmirror

Determining fastest mirrors

epel/x86_64/metalink | 4.4 kB 00:00:00

* epel: mirrors.ustc.edu.cn

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

epel | 4.7 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes/signature | 844 B 00:00:00

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Retrieving key from https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

kubernetes/signature | 1.4 kB 00:00:00 !!!

https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno -1] repomd.xml signature could not be verified for kubernetes

Trying other mirror.

One of the configured repositories failed (Kubernetes),

and yum doesn't have enough cached data to continue. At this point the only

safe thing yum can do is fail. There are a few ways to work "fix" this:

1. Contact the upstream for the repository and get them to fix the problem.

2. Reconfigure the baseurl/etc. for the repository, to point to a working

upstream. This is most often useful if you are using a newer

distribution release than is supported by the repository (and the

packages for the previous distribution release still work).

3. Run the command with the repository temporarily disabled

yum --disablerepo=kubernetes ...

4. Disable the repository permanently, so yum won't use it by default. Yum

will then just ignore the repository until you permanently enable it

again or use --enablerepo for temporary usage:

yum-config-manager --disable kubernetes

or

subscription-manager repos --disable=kubernetes

5. Configure the failing repository to be skipped, if it is unavailable.

Note that yum will try to contact the repo. when it runs most commands,

so will have to try and fail each time (and thus. yum will be be much

slower). If it is a very temporary problem though, this is often a nice

compromise:

yum-config-manager --save --setopt=kubernetes.skip_if_unavailable=true

failure: repodata/repomd.xml from kubernetes: [Errno 256] No more mirrors to try.

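https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno -1] repomd.xml signature could not be verified for kubernetes # the Aliyun Kubernetes repo failed metadata signature verification; given the late hour and past experience, my first guess was that the mirror was in the middle of an upstream sync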

(2021-03-11: it was getting late, so I stopped here and continued another day.)
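Whatever the root cause, a workaround that was widely used for this Aliyun mirror at the time is to disable only the metadata signature check (repo_gpgcheck=0) while keeping gpgcheck=1, so the RPM packages themselves are still signature-checked. Treat this as an assumption; the log above does not confirm it. A minimal sketch that edits a copy of the repo file; REPO_FILE is a hypothetical variable that would point at /etc/yum.repos.d/kubernetes.repo on a real node:

```shell
#!/usr/bin/env bash
# Sketch: disable only repomd.xml signature checking for the kubernetes repo.
# REPO_FILE is hypothetical; it defaults to a temporary file so this is safe to run anywhere.
set -euo pipefail

REPO_FILE=${REPO_FILE:-$(mktemp)}

# Recreate the repo definition used earlier in this guide if the file is empty.
if [ ! -s "$REPO_FILE" ]; then
  cat > "$REPO_FILE" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
fi

# Turn off metadata signature verification; keep gpgcheck=1 for the packages.
sed -i 's/^repo_gpgcheck=1$/repo_gpgcheck=0/' "$REPO_FILE"

grep -E '^(gpgcheck|repo_gpgcheck)=' "$REPO_FILE"
```

On a real node, follow it with yum clean all && yum makecache.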

[root@k8s-master01 ~]# ulimit -SHn 65535

[root@k8s-master01 ~]# vim /etc/security/limits.conf # configure limits on all nodes

# append the following at the end of the file

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited
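A soft limit must not exceed the hard limit for the same item: setrlimit(2) rejects such a pair with EINVAL. A small checker for limits.conf-style files, sketched with awk; check_limits is a hypothetical helper, and it only compares entries that share the same domain and item:

```shell
#!/usr/bin/env bash
# Sketch: report limits.conf entries whose soft limit exceeds the hard limit
# for the same domain and item. Exit status 1 if any such pair is found.
check_limits() {
  awk '
    # Skip comments and blank lines; entries look like: <domain> <type> <item> <value>
    /^[[:space:]]*(#|$)/ { next }
    NF >= 4 {
      key = $1 " " $3
      # Represent "unlimited"/"infinity" as -1.
      v = ($4 == "unlimited" || $4 == "infinity") ? -1 : $4 + 0
      if ($2 == "soft") soft[key] = v
      else if ($2 == "hard") hard[key] = v
    }
    END {
      bad = 0
      for (k in soft) {
        if (!(k in hard)) continue
        s = soft[k]; h = hard[k]
        # A finite hard limit is violated by an unlimited or larger soft limit.
        if (h != -1 && (s == -1 || s > h)) {
          printf "soft > hard for %s (soft=%s, hard=%s)\n", k, (s == -1 ? "unlimited" : s), h
          bad = 1
        }
      }
      exit bad
    }
  ' "${1:-/etc/security/limits.conf}"
}

# Example: this pair would be flagged.
sample=$(mktemp)
printf '%s\n' '* soft nofile 655360' '* hard nofile 131072' > "$sample"
check_limits "$sample" || echo "limits need fixing"
```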

[root@k8s-master01 ~]# ssh-keygen -t rsa

[root@k8s-master01 ~]# for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done # passwordless SSH from Master01 to all other nodes

[root@k8s-master01 ~]# cd /root/ && rz # upload the source/installation files

[root@k8s-master01 ~]# yum update -y && reboot # update the system on all nodes, then reboot

[root@k8s-master01 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y # kernel configuration: install ipvsadm and related tools on all nodes

[root@k8s-master01 ~]# vim ipvs.sh # script that loads the IPVS kernel modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_sh

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- nf_conntrack_ipv4

[root@k8s-master01 ~]# chmod +x ipvs.sh

[root@k8s-master01 ~]# sh ipvs.sh

[root@k8s-master01 ~]# lsmod |grep ip_vs

ip_vs_ftp 13079 0

nf_nat 26583 1 ip_vs_ftp

ip_vs_sed 12519 0

ip_vs_nq 12516 0

ip_vs_sh 12688 0

ip_vs_dh 12688 0

ip_vs_lblcr 12922 0

ip_vs_lblc 12819 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs_wlc 12519 0

ip_vs_lc 12516 0

ip_vs 145458 22 ip_vs_dh,ip_vs_lc,ip_vs_nq,ip_vs_rr,ip_vs_sh,ip_vs_ftp,ip_vs_sed,ip_vs_wlc,ip_vs_wrr,ip_vs_lblcr,ip_vs_lblc

nf_conntrack 139264 3 ip_vs,nf_nat,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

[root@k8s-master01 ~]# echo "/root/kubernetes/ipvs/ipvs.sh" >>/etc/rc.local # run at boot; use the path where ipvs.sh actually lives (it was created in /root above)

[root@k8s-master01 ~]# chmod +x /etc/rc.local

[root@k8s-master01 ~]# systemctl enable --now systemd-modules-load.service # load the kernel module configuration at boot (all nodes)
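Since systemd-modules-load.service is enabled anyway, an alternative to rc.local is to list the modules in a file under /etc/modules-load.d/, which that service reads at boot. A minimal sketch; MODULES_DIR is a hypothetical override so it can be dry-run in a temporary directory, and the module list mirrors ipvs.sh. On kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so that entry would change there; the CentOS 7 3.10 kernel still uses nf_conntrack_ipv4.

```shell
#!/usr/bin/env bash
# Sketch: persist the IPVS modules through systemd-modules-load instead of rc.local.
# MODULES_DIR is hypothetical; on a node it would be /etc/modules-load.d.
set -eu
MODULES_DIR=${MODULES_DIR:-$(mktemp -d)}

# Same modules as ipvs.sh (use nf_conntrack instead of nf_conntrack_ipv4 on kernel >= 4.19).
modules=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4)

# One module name per line, as modules-load.d(5) expects.
printf '%s\n' "${modules[@]}" > "$MODULES_DIR/ipvs.conf"
cat "$MODULES_DIR/ipvs.conf"

# On a real node (as root), load them now and verify:
#   systemctl restart systemd-modules-load.service
#   lsmod | grep -e ip_vs -e nf_conntrack
```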

[root@k8s-master01 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

> net.ipv4.ip_forward = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.bridge.bridge-nf-call-ip6tables = 1

> fs.may_detach_mounts = 1

> vm.overcommit_memory=1

> vm.panic_on_oom=0

> fs.inotify.max_user_watches=89100

> fs.file-max=52706963

> fs.nr_open=52706963

> net.netfilter.nf_conntrack_max=2310720

>

> net.ipv4.tcp_keepalive_time = 600

> net.ipv4.tcp_keepalive_probes = 3

> net.ipv4.tcp_keepalive_intvl =15

> net.ipv4.tcp_max_tw_buckets = 36000

> net.ipv4.tcp_tw_reuse = 1

> net.ipv4.tcp_max_orphans = 327680

> net.ipv4.tcp_orphan_retries = 3

> net.ipv4.tcp_syncookies = 1

> net.ipv4.tcp_max_syn_backlog = 16384

> net.ipv4.ip_conntrack_max = 65536

> net.ipv4.tcp_max_syn_backlog = 16384

> net.ipv4.tcp_timestamps = 0

> net.core.somaxconn = 16384

> EOF

[root@k8s-master01 ~]# sysctl --system # apply the kernel parameters a Kubernetes cluster needs; configure on all nodes

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.ipv4.ip_forward = 1

fs.may_detach_mounts = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.netfilter.nf_conntrack_max = 2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

* Applying /etc/sysctl.conf ...
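Two things stand out in the file and its output above: net.ipv4.tcp_max_syn_backlog is set twice (harmless here because both values are identical), and the net.bridge.bridge-nf-call-* keys do not appear in the applied list, which suggests they were not applied at that point; those keys only exist once the br_netfilter kernel module is loaded. A small sketch for spotting repeated keys; dup_sysctl_keys is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: print sysctl keys that are assigned more than once in a .conf file.
dup_sysctl_keys() {
  # Drop comment and blank lines, keep the key left of '=', strip whitespace,
  # then print every key that occurs more than once.
  sed -e 's/^[[:space:]]*[#;].*//' -e '/^[[:space:]]*$/d' "$1" \
    | awk -F= '{ gsub(/[[:space:]]/, "", $1); print $1 }' \
    | sort | uniq -d
}

# On a node:
#   dup_sysctl_keys /etc/sysctl.d/k8s.conf
# The net.bridge.* keys only exist once br_netfilter is loaded; to load it now and at boot:
#   modprobe br_netfilter && echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
#   sysctl --system
```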

[root@k8s-master01 ~]# yum install docker-ce-19.03.* -y # install Docker CE 19.03 on all nodes

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now docker # enable Docker at boot and start it on all nodes

[root@k8s-master01 ~]# yum list kubeadm.x86_64 --showduplicates | sort -r # list the published Kubernetes versions

[root@k8s-master01 ~]# yum install kubeadm -y # install the latest kubeadm on all nodes

[root@k8s-master01 ~]# cat >/etc/sysconfig/kubelet <<EOF # the default pause image comes from gcr.io, so point kubelet at the Aliyun pause image

KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"

EOF

[root@k8s-master01 ~]# yum install keepalived haproxy -y # install HAProxy and Keepalived with yum on all master nodes

[root@k8s-master01 ~]# cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak

[root@k8s-master01 ~]# vim /etc/haproxy/haproxy.cfg # configure HAProxy on all master nodes; the configuration is identical on every master (see the HAProxy documentation for details)

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 192.168.13.31:6443 check

server k8s-master02 192.168.13.65:6443 check

server k8s-master03 192.168.13.18:6443 check
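Every master must appear in the backend with the same IP it has in /etc/hosts, and a copy-paste mistake here is easy to miss because HAProxy starts fine either way. A hypothetical consistency check, assuming the server lines use the form server <hostname> <ip>:<port> as above and that the hostnames match the /etc/hosts entries:

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that each "server <name> <ip>:<port>" line in an
# HAProxy config uses the IP that the hosts file assigns to <name>.
check_backends() {
  local cfg=$1 hosts=$2 rc=0 name addr ip expected
  while read -r name addr; do
    ip=${addr%:*}
    # First hosts entry whose hostname (2nd field) equals the server name.
    expected=$(awk -v n="$name" '$2 == n { print $1; exit }' "$hosts")
    if [ "$ip" != "$expected" ]; then
      echo "MISMATCH: $name is $ip in $cfg but ${expected:-missing} in $hosts"
      rc=1
    fi
  done < <(awk '$1 == "server" { print $2, $3 }' "$cfg")
  return "$rc"
}

# On each master:
#   check_backends /etc/haproxy/haproxy.cfg /etc/hosts && echo "backends match /etc/hosts"
#   haproxy -c -f /etc/haproxy/haproxy.cfg   # HAProxy's own syntax check
```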

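[root@k8s-master01 ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak # configure Keepalived on all master nodes; the configuration differs per node, so check each node's IP (mcast_src_ip) and NIC (the interface parameter)

## Configuration on Master01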

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip 192.168.13.31

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.13.17

}

# track_script {

# chk_apiserver

# }

}

## Configuration on Master02

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 192.168.13.65

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.13.17

}

# track_script {

# chk_apiserver

# }

}

## Configuration on Master03

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 192.168.13.18

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.13.17

}

# track_script {

# chk_apiserver

# }

}

[root@k8s-master01 ~]# cat /etc/keepalived/check_apiserver.sh # Keepalived health check script

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi
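The script above retries pgrep haproxy up to three times, one second apart, and stops Keepalived (so a backup master takes over the VIP) if HAProxy never shows up. The same retry logic, factored into a hypothetical retry_check helper so it can be exercised without Keepalived; the function name and the parameterized delay are illustrative, not part of the original script:

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring check_apiserver.sh: run a check command up to
# $1 times, sleeping $2 seconds after each failed attempt except the last.
# Returns 0 as soon as one attempt succeeds, 1 if all attempts fail.
retry_check() {
  local tries=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= tries; i++ )); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    if (( i < tries )); then
      sleep "$delay"
    fi
  done
  return 1
}

# How check_apiserver.sh could use it (on a master node, as root):
#   if ! retry_check 3 1 pgrep haproxy; then
#     echo "systemctl stop keepalived"
#     /usr/bin/systemctl stop keepalived
#     exit 1
#   fi
#   exit 0

# Quick self-check with commands that always succeed / always fail:
retry_check 3 0 true && echo "true: healthy"
retry_check 3 0 false || echo "false: unhealthy after 3 attempts"
```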

[root@k8s-master01 ~]# chmod +x /etc/keepalived/check_apiserver.sh

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now haproxy # start HAProxy and Keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@k8s-master01 ~]# systemctl enable --now keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

Cluster initialization

[root@k8s-master01 ~]# vim new_k8s.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: 7t2weq.bjbawausm0jaxury

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.13.31

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: k8s-master01

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  certSANs:

  - 192.168.13.17

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 192.168.13.17:16443

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.20.0

networking:

  dnsDomain: cluster.local

  podSubnet: 172.168.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

[root@k8s-master01 ~]# kubeadm config images pull --config /root/new_k8s.yaml # pull the images on the master nodes

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

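[root@k8s-master01 ~]# systemctl enable --now kubelet # enable kubelet at boot on all nodes

[root@k8s-master01 ~]# kubeadm init --config /root/new_k8s.yaml --upload-certs # initializing Master01 generates the certificates and config files under /etc/kubernetes; the other master nodes then just join the cluster

After a successful initialization, kubeadm prints the token and join commands the other nodes need, so record them: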

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6 \

--control-plane --certificate-key af885c32f3332dabb38d9173d598ce62e99bb2d11cb23a00119b73eefd6cff34

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6

[root@k8s-master01 ~]# cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

[root@k8s-master01 ~]# source /root/.bashrc

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 71s v1.20.4

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-54d67798b7-d2wnb 0/1 Pending 0 86s <none> <none> <none> <none>

coredns-54d67798b7-qgmhk 0/1 Pending 0 86s <none> <none> <none> <none>

etcd-k8s-master01 1/1 Running 0 88s 192.168.13.31 k8s-master01 <none> <none>

kube-apiserver-k8s-master01 1/1 Running 1 88s 192.168.13.31 k8s-master01 <none> <none>

kube-controller-manager-k8s-master01 1/1 Running 0 88s 192.168.13.31 k8s-master01 <none> <none>

kube-proxy-pzbqq 1/1 Running 0 87s 192.168.13.31 k8s-master01 <none> <none>

kube-scheduler-k8s-master01 1/1 Running 0 88s 192.168.13.31 k8s-master01 <none> <none>

High-availability masters

[root@k8s-master01 ~]# docker info | grep -i cgroup

Cgroup Driver: systemd # prerequisite: Docker uses the systemd cgroup driver

Join the other masters to the cluster

[root@k8s-master02 ~]# cat <<EOF> /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

[root@k8s-master02 ~]# systemctl restart docker

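Pitfall 1: the first deployment had problems because the Keepalived health check (the track_script block) was not enabled; after enabling it, I had to redeploy the cluster, resetting Master01 as follows: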

[root@k8s-master01 ~]# kubectl get nodes

[root@k8s-master01 ~]# kubectl delete node k8s-master01

[root@k8s-master01 ~]# rm -rf /etc/kubernetes/*

[root@k8s-master01 ~]# kubeadm reset

[root@k8s-master01 ~]# rm -rf ~/.kube/*

[root@k8s-master01 ~]# rm -rf /var/lib/etcd/*

[root@k8s-master01 ~]# kubeadm reset -f

Pitfall 2: the first attempt to join another master failed; kubeadm join timed out with error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

[root@k8s-master02 ~]# kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury \

> --discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6 \

> --control-plane --certificate-key af885c32f3332dabb38d9173d598ce62e99bb2d11cb23a00119b73eefd6cff34

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.13.65 192.168.13.17]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master02 localhost] and IPs [192.168.13.65 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master02 localhost] and IPs [192.168.13.65 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[kubelet-check] Initial timeout of 40s passed.

error execution phase kubelet-start: error uploading crisocket: timed out waiting for the condition

To see the stack trace of this error execute with --v=5 or higher

Solution: reset the node, restart kubelet, flush iptables, then join again:

[root@k8s-master02 ~]# kubeadm reset

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl restart kubelet

[root@k8s-master02 ~]# iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

[root@k8s-master02 ~]# kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6 --control-plane --certificate-key af885c32f3332dabb38d9173d598ce62e99bb2d11cb23a00119b73eefd6cff34 # rejoin the node

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master02 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.13.65 192.168.13.17]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master02 localhost] and IPs [192.168.13.65 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master02 localhost] and IPs [192.168.13.65 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8s-master02 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master02 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@k8s-master02 ~]#

On Master01:

[root@k8s-master01 ~]# kubectl get nodes # check whether the node has joined

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 5m52s v1.20.4

k8s-master02 NotReady control-plane,master 54s v1.20.4

[root@k8s-master03 manifests]# kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury \

> --discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6 \

> --control-plane --certificate-key af885c32f3332dabb38d9173d598ce62e99bb2d11cb23a00119b73eefd6cff34 # join Master03 to the cluster

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master03 localhost] and IPs [192.168.13.18 127.0.0.1 ::1]

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master03 localhost] and IPs [192.168.13.18 127.0.0.1 ::1]

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master03 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.13.18 192.168.13.17]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node k8s-master03 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master03 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@k8s-master03 manifests]#

Add worker nodes

[root@k8s-node01 ~]# kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6

[preflight] Running pre-flight checks

error execution phase preflight: couldn't validate the identity of the API Server: Get "https://192.168.13.17:16443/api/v1/namespaces/kube-public/configmaps/cluster-info?timeout=10s": dial tcp 192.168.13.17:16443: connect: connection refused

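To see the stack trace of this error execute with --v=5 or higher

Note: node01 failed to join. At first this looked like an expired token. So a new token was created with kubeadm token create, the discovery-token-ca-cert-hash was recomputed with openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //', and the join was retried. It still did not succeed. However, "connection refused" means the TCP connection to the VIP 192.168.13.17:16443 was itself rejected, before any token is checked. In addition, node02 joined below with the same token. The HAProxy/Keepalived endpoint, or the network path from node01 to it, is therefore the more likely cause. This issue is left open for now and will be revisited later.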
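The error above is a TCP-level "connection refused", not a token validation failure. Before regenerating tokens, it is worth checking whether node01 can reach the load-balanced API endpoint at all. If a new join command is needed, you can also recompute the CA hash with the openssl pipeline from the kubeadm documentation. This sketch assumes bash (for /dev/tcp), coreutils timeout and openssl are available on the node. check_endpoint and ca_cert_hash are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: two checks to run on a node before retrying `kubeadm join`.
# Assumes bash (for /dev/tcp), coreutils `timeout`, and openssl.
# check_endpoint and ca_cert_hash are hypothetical helper names.

# check_endpoint HOST PORT: succeed only if a TCP connection can be opened.
check_endpoint() {
  local host="$1" port="$2"
  # The child bash opens fd 3 on /dev/tcp/HOST/PORT; timeout caps dropped packets at 3s.
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "OK ${host}:${port}"
    return 0
  fi
  echo "FAIL ${host}:${port}"
  return 1
}

# ca_cert_hash [CA_CRT]: print the hex value used after "sha256:" in
# --discovery-token-ca-cert-hash (SHA-256 of the DER-encoded CA public key).
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Run check_endpoint 192.168.13.17 16443 on k8s-node01. It should print OK.

If it prints FAIL, check two things on the master that should hold the VIP:
- ip addr should show 192.168.13.17.
- ss -lnt should show HAProxy listening on 16443.

Generate a new token only if the endpoint is reachable and the join still fails. On a master, kubeadm token create --print-join-command prints a complete join command, and ca_cert_hash should match the value after sha256: in that command.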

[root@k8s-node02 ~]# kubeadm join 192.168.13.17:16443 --token 7t2weq.bjbawausm0jaxury \

> --discovery-token-ca-cert-hash sha256:4f2bf739023f90b689c821491506363c8f8c6669cfd7067885f1a7e9e110f1f6

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
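Instead of re-running kubectl get nodes by hand, you can poll the node's Ready condition until it reports True. This is a minimal sketch. wait_node_ready and its defaults (30 checks, 10 seconds apart) are hypothetical choices and not part of kubeadm.

```shell
#!/usr/bin/env bash
# Sketch: poll a node's Ready condition until it is "True" or the retries run out.
# wait_node_ready is a hypothetical helper; run it on a master with admin.conf.
# Usage: wait_node_ready NODE [TRIES] [INTERVAL_SECONDS]
wait_node_ready() {
  local node="$1" tries="${2:-30}" interval="${3:-10}" i=1 status
  while [ "$i" -le "$tries" ]; do
    # The jsonpath filter selects the status of the condition whose type is Ready.
    status=$(kubectl get node "$node" \
      -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}' 2>/dev/null)
    if [ "$status" = "True" ]; then
      echo "$node Ready"
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  echo "$node not Ready after $tries checks"
  return 1
}
```

For example, run wait_node_ready k8s-node02 on k8s-master01. A node that has joined but has no working CNI plugin typically stays NotReady. A timeout here therefore usually points at the network add-on rather than at kubeadm.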

Metrics Server Deployment

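In recent Kubernetes versions, system resource metrics are collected by Metrics Server. It provides CPU and memory usage for nodes and Pods through the metrics.k8s.io API, which kubectl top and the Horizontal Pod Autoscaler read. First, copy front-proxy-ca.crt from Master01 to all worker nodes.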

[root@k8s-master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

front-proxy-ca.crt 100% 1078 812.4KB/s 00:00
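The command above copies the certificate only to k8s-node02, but the text calls for every worker node. A small loop covers the rest. This sketch assumes passwordless SSH from k8s-master01 and uses the worker names from /etc/hosts. copy_front_proxy_ca is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: copy Master01's front-proxy CA certificate to each node given as an argument.
# Assumes passwordless SSH/scp from k8s-master01; copy_front_proxy_ca is hypothetical.
copy_front_proxy_ca() {
  local src=/etc/kubernetes/pki/front-proxy-ca.crt node rc=0
  for node in "$@"; do
    # Same path on the target; /etc/kubernetes/pki already exists after kubeadm join.
    if scp "$src" "${node}:${src}"; then
      echo "copied to ${node}"
    else
      echo "failed: ${node}" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: copy_front_proxy_ca k8s-node01 k8s-node02. The masters that joined as control-plane nodes normally have this file already, because their kube-apiserver uses it. That is why only the workers are listed.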

[root@k8s-master01 ~]# cd /root/k8s-ha-install/metrics-server-0.4.x-kubeadm/

[root@k8s-master01 metrics-server-0.4.x-kubeadm]# kubectl create -f comp.yaml # install Metrics Server

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
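After comp.yaml is applied, confirm that the metrics API actually answers before moving on. This sketch uses kubectl wait on the Deployment, followed by kubectl top. verify_metrics is a hypothetical helper, and its 120s default timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: wait for the metrics-server Deployment, then query node usage.
# verify_metrics is a hypothetical helper; "metrics-server" in kube-system matches
# the deployment created by comp.yaml above.
verify_metrics() {
  local limit="${1:-120s}"
  # Blocks until the Deployment's Available condition is True, or fails at the timeout.
  kubectl -n kube-system wait --for=condition=Available \
    deployment/metrics-server --timeout="$limit" || return 1
  # Reads CPU/memory usage through the aggregated metrics.k8s.io API.
  kubectl top nodes
}
```

Even after the Deployment becomes Available, kubectl top may report for a short while that metrics are not yet available, because Metrics Server has not finished its first scrape. Re-running it after a minute is usually enough.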

Dashboard Deployment

[root@k8s-master01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

--2021-03-13 14:00:39-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.108.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response...

200 OK

Length: 7568 (7.4K) [text/plain]

Saving to: ‘recommended.yaml’

100%[======================================================================================================================================================================================>] 7,568 --.-K/s in 0s

2021-03-13 14:00:40 (19.7 MB/s) - ‘recommended.yaml’ saved [7568/7568]


[root@k8s-master01 ~]# sed -i '/namespace/ s/kubernetes-dashboard/kube-system/g' recommended.yaml
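The sed above rewrites kubernetes-dashboard only on lines that contain the word namespace. The namespace: fields and the --namespace= argument therefore move to kube-system. The Namespace object's own name: kubernetes-dashboard line is left alone, which is why kubectl apply still reports namespace/kubernetes-dashboard created. The sketch below runs the same sed and then reports anything it missed. retarget_dashboard_ns is a hypothetical helper, and it assumes GNU sed (-i with no backup suffix).

```shell
#!/usr/bin/env bash
# Sketch: apply the namespace rewrite used above, then report what it left behind.
# retarget_dashboard_ns is a hypothetical helper; assumes GNU sed for in-place -i.
retarget_dashboard_ns() {
  local file="$1"
  sed -i '/namespace/ s/kubernetes-dashboard/kube-system/g' "$file"
  # A "namespace" line that still mentions kubernetes-dashboard would be a miss.
  if grep -n 'namespace.*kubernetes-dashboard' "$file"; then
    return 1
  fi
  # Count lines that now target kube-system ("namespace: kube-system" or "--namespace=kube-system").
  grep -c 'namespace[:=] *kube-system' "$file"
}
```

Running retarget_dashboard_ns recommended.yaml on a fresh download prints the number of rewritten namespace lines. The sed is idempotent, so running it on an already edited file just reports the same count again.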

[root@k8s-master01 ~]# docker pull kubernetesui/dashboard:v2.0.0-beta8

v2.0.0-beta8: Pulling from kubernetesui/dashboard

5cd0d71945f0: Pull complete

Digest: sha256:fc90baec4fb62b809051a3227e71266c0427240685139bbd5673282715924ea7

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0-beta8

docker.io/kubernetesui/dashboard:v2.0.0-beta8

[root@k8s-master01 ~]# docker pull kubernetesui/metrics-scraper:v1.0.1

v1.0.1: Pulling from kubernetesui/metrics-scraper

4689bc3c8a60: Pull complete

d6f7da934d73: Pull complete

ee60d0f2a8a1: Pull complete

Digest: sha256:35fcae4fd9232a541a8cb08f2853117ba7231750b75c2cb3b6a58a2aaa57f878

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.1

docker.io/kubernetesui/metrics-scraper:v1.0.1
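These two docker pull commands cache the images only on k8s-master01. The scheduler may place the Dashboard Pods on any node whose taints they tolerate. Also, the dashboard container sets imagePullPolicy: Always, so its kubelet contacts the registry anyway. Pre-pulling mainly helps the metrics-scraper container, whose pull policy defaults to IfNotPresent for a fixed tag. The sketch below pre-pulls on every node, assuming passwordless SSH and Docker on each one. prepull_images is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: pre-pull the Dashboard images on every node given as an argument.
# Assumes passwordless SSH and Docker on each node; prepull_images is hypothetical.
IMAGES="kubernetesui/dashboard:v2.0.0-beta8 kubernetesui/metrics-scraper:v1.0.1"

prepull_images() {
  local node img failed=0
  for node in "$@"; do
    # $IMAGES is intentionally unquoted so it splits into one image per word.
    for img in $IMAGES; do
      ssh "$node" docker pull "$img" >/dev/null || {
        echo "pull failed: $node $img" >&2
        failed=1
      }
    done
  done
  return "$failed"
}
```

Usage: prepull_images k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02.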

[root@k8s-master01 ~]# vim recommended.yaml # NodePort approach: for easy local access, edit the YAML and change the Service to type NodePort:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31260
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kube-system
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kube-system
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kube-system

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kube-system

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta8
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kube-system
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.1
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@k8s-master01 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard unchanged

service/kubernetes-dashboard configured

secret/kubernetes-dashboard-certs unchanged

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master01 ~]# kubectl get pod --namespace=kube-system -o wide | grep dashboard # if the Pods are Running, the Dashboard has been deployed successfully

dashboard-metrics-scraper-7445d59dfd-cbklv 1/1 Running 0 55s <none> <none> <none> <none>

kubernetes-dashboard-6f85cc8978-djlvp 1/1 Running 0 55s <none> <none> <none> <none>

[root@k8s-master01 ~]# kubectl get deployment kubernetes-dashboard --namespace=kube-system # the Dashboard creates its own Deployment and Service in the kube-system namespace

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 0/1 1 0 70s
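At this point the Deployment still shows 0/1 ready. Rather than polling by hand, you can let kubectl rollout status block until both Dashboard Deployments have finished rolling out. wait_dashboard and its 180s default timeout are hypothetical choices; the Deployment names and namespace match the YAML above.

```shell
#!/usr/bin/env bash
# Sketch: wait for both Dashboard Deployments to finish rolling out.
# wait_dashboard is a hypothetical helper; names/namespace match recommended.yaml above.
wait_dashboard() {
  local limit="${1:-180s}" d
  for d in kubernetes-dashboard dashboard-metrics-scraper; do
    # Exits non-zero if the rollout does not complete within the timeout.
    kubectl -n kube-system rollout status "deployment/${d}" --timeout="$limit" || return 1
  done
}
```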

[root@k8s-master01 ~]# kubectl get service kubernetes-dashboard --namespace=kube-system # check the Service: TYPE is now NodePort and the node port is 31260

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.103.35.30 <none> 443:31260/TCP 10m

[root@k8s-master01 ~]# kubectl get service -n kube-system | grep dashboard

dashboard-metrics-scraper ClusterIP 10.101.164.199 <none> 8000/TCP 108s

kubernetes-dashboard NodePort 10.103.35.30 <none> 443:31260/TCP 10m
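With a NodePort Service, the Dashboard is reachable on that port at any node's IP. It is served over HTTPS with the self-signed certificate created by --auto-generate-certificates, so the browser will warn about it.

If you prefer not to edit the YAML, kubectl -n kube-system patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' switches the type in place. In that case Kubernetes picks the nodePort itself.

The sketch below reads the port from the Service instead of hard-coding 31260. dashboard_url is a hypothetical helper, and its default IP is k8s-master01's address from the node plan.

```shell
#!/usr/bin/env bash
# Sketch: build the Dashboard URL from the Service's nodePort.
# dashboard_url is a hypothetical helper; default IP is k8s-master01 from the node plan.
dashboard_url() {
  local node_ip="${1:-192.168.13.31}" port
  port=$(kubectl -n kube-system get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  # An empty result means the Service is not of type NodePort (or does not exist).
  [ -n "$port" ] || return 1
  echo "https://${node_ip}:${port}"
}
```

Usage: dashboard_url, or dashboard_url 192.168.13.92 to go through k8s-node01.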

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide # check which node the Dashboard is running on