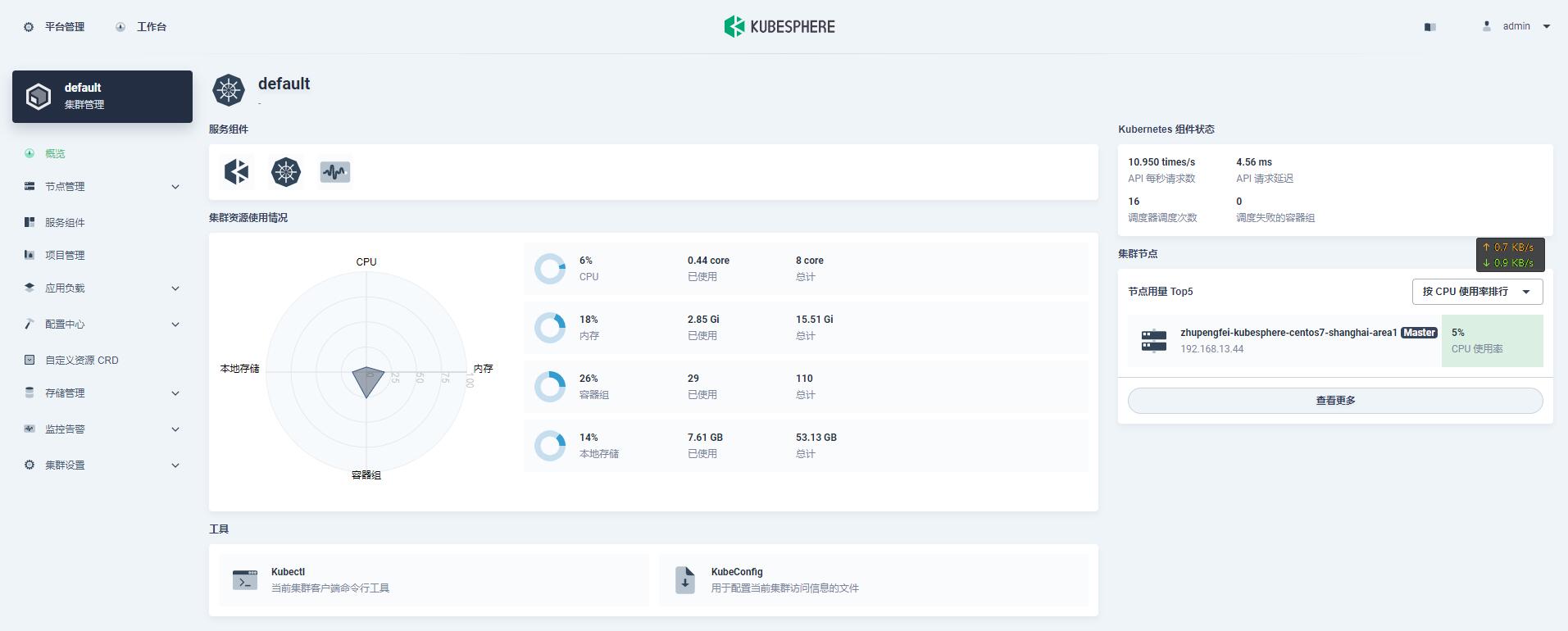

Deploy KubeSphere in All-in-One mode for use as a test environment; for production, add Master nodes to manage the cluster.

[root@zhupengfei-kubesphere-centos7-shanghai-area1 ~]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 2

Core(s) per socket: 4

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 6

Model name: QEMU Virtual CPU version 2.0.0

Stepping: 3

CPU MHz: 2599.998

BogoMIPS: 5199.99

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 4096K

NUMA node0 CPU(s): 0-7

Flags: fpu de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pse36 clflush mmx fxsr sse sse2 ht syscall nx lm rep_good nopl eagerfpu pni cx16 x2apic popcnt hypervisor lahf_lm

[root@zhupengfei-kubesphere-centos7-shanghai-area1 ~]# free -mh

total used free shared buff/cache available

Mem: 15G 1G 11.7G 15M 1G 12G

Swap: 0B 0B 0B
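The `lscpu` and `free` output above shows 8 vCPUs and roughly 15 GB of memory on this host. A minimal sketch of the same check in script form follows. The helper name `meets_minimum` is hypothetical, and the default thresholds (2 CPUs, 4096 MB) are assumptions for illustration only; replace them with the requirements documented for the KubeSphere version being installed.

```shell
#!/bin/sh
# meets_minimum CPUS MEM_MB [MIN_CPUS] [MIN_MEM_MB]
# Returns 0 when both values reach the thresholds, 1 otherwise.
# The default thresholds are illustrative assumptions, not official limits.
meets_minimum() {
  have_cpus=$1
  have_mem_mb=$2
  min_cpus=${3:-2}
  min_mem_mb=${4:-4096}
  [ "$have_cpus" -ge "$min_cpus" ] && [ "$have_mem_mb" -ge "$min_mem_mb" ]
}

# Read the local values when the tools are available (Linux only).
if command -v nproc >/dev/null 2>&1 && [ -r /proc/meminfo ]; then
  cpus=$(nproc)
  # MemTotal is reported in kB; convert to MB.
  mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  if meets_minimum "$cpus" "$mem_mb"; then
    echo "ok: ${cpus} CPUs, ${mem_mb} MB"
  else
    echo "insufficient: ${cpus} CPUs, ${mem_mb} MB"
  fi
fi
```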

[root@zhupengfei-kubesphere-centos7-shanghai-area1 packages]# curl -sfL https://get-kk.kubesphere.io | VERSION=v1.0.1 sh - # Download KubeKey

[root@zhupengfei-kubesphere-centos7-shanghai-area1 packages]# ./kk create cluster --with-kubernetes v1.17.9 --with-kubesphere v3.0.0 # Deploy Kubernetes and KubeSphere together

+----------------------------------------------+------+------+---------+----------+-------+-------+-----------+--------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | conntrack | docker | nfs client | ceph client | glusterfs client | time |

+----------------------------------------------+------+------+---------+----------+-------+-------+-----------+--------+------------+-------------+------------------+--------------+

| zhupengfei-kubesphere-centos7-shanghai-area1 | y | y | y | y | y | y | y | | y | | | CST 14:20:28 |

+----------------------------------------------+------+------+---------+----------+-------+-------+-----------+--------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, you should ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes
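In the check table above, `y` marks a dependency already present on the host and an empty cell marks one that is absent. Here `docker` is empty, and the log that follows shows KubeKey installing it. A small sketch, assuming the header and host rows are available as text, that lists the empty columns; `missing_deps` is a hypothetical helper, not part of KubeKey.

```shell
#!/bin/sh
# missing_deps HEADER_ROW HOST_ROW
# Prints the column names whose cell is empty in the host row.
missing_deps() {
  printf '%s\n%s\n' "$1" "$2" | awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    # First line: remember each column name by position.
    NR == 1 { for (i = 2; i < NF; i++) name[i] = trim($i); next }
    # Host row: field 2 is the host name, so start at field 3.
    { for (i = 3; i < NF; i++) if (trim($i) == "") print name[i] }
  '
}

header='| name | sudo | curl | openssl | ebtables | socat | ipset | conntrack | docker | nfs client | ceph client | glusterfs client | time |'
row='| zhupengfei-kubesphere-centos7-shanghai-area1 | y | y | y | y | y | y | y | | y | | | CST 14:20:28 |'
missing_deps "$header" "$row"
```

For the row recorded above, this lists `docker`, `ceph client`, and `glusterfs client`.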

INFO[14:20:30 CST] Downloading Installation Files

INFO[14:20:30 CST] Downloading kubeadm ...

INFO[14:20:30 CST] Downloading kubelet ...

INFO[14:20:31 CST] Downloading kubectl ...

INFO[14:20:31 CST] Downloading helm ...

INFO[14:20:31 CST] Downloading kubecni ...

INFO[14:20:31 CST] Configurating operating system ...

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

no crontab for root
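The MSG block above lists the kernel parameters KubeKey applied while configuring the operating system. A hedged sketch for re-checking them later by reading the files under `/proc/sys`; the helper names `sysctl_path`, `check_sysctl`, and `check_all` are hypothetical, and the optional root argument exists only so the functions can be pointed at a test directory.

```shell
#!/bin/sh
# sysctl_path KEY [ROOT]
# Maps a dotted sysctl key to its file under ROOT (default /proc/sys).
sysctl_path() {
  printf '%s/%s\n' "${2:-/proc/sys}" "$(printf '%s' "$1" | tr '.' '/')"
}

# check_sysctl KEY EXPECTED [ROOT]
# Prints one status line; returns non-zero when the value differs or is unreadable.
check_sysctl() {
  file=$(sysctl_path "$1" "$3")
  actual=$(cat "$file" 2>/dev/null)
  if [ "$actual" = "$2" ]; then
    echo "ok       $1 = $actual"
  else
    echo "mismatch $1 = ${actual:-<unreadable>} (expected $2)"
    return 1
  fi
}

# check_all [ROOT]
# Checks the values shown in the MSG output above.
# The net.bridge.* files exist only while the br_netfilter module is loaded.
check_all() {
  root=$1
  rc=0
  while read -r key value; do
    check_sysctl "$key" "$value" "$root" || rc=1
  done <<EOF
net.ipv4.ip_forward 1
net.bridge.bridge-nf-call-arptables 1
net.bridge.bridge-nf-call-ip6tables 1
net.bridge.bridge-nf-call-iptables 1
net.ipv4.ip_local_reserved_ports 30000-32767
EOF
  return "$rc"
}

check_all "" || echo "some parameters differ from the values KubeKey set"
```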

INFO[14:20:33 CST] Installing docker ...

INFO[14:21:27 CST] Start to download images on all nodes

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/etcd:v3.3.12

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/pause:3.1

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/kube-apiserver:v1.17.9

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/kube-controller-manager:v1.17.9

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/kube-scheduler:v1.17.9

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/kube-proxy:v1.17.9

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: coredns/coredns:1.6.9

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: kubesphere/k8s-dns-node-cache:1.15.12

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: calico/kube-controllers:v3.15.1

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: calico/cni:v3.15.1

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: calico/node:v3.15.1

[zhupengfei-kubesphere-centos7-shanghai-area1] Downloading image: calico/pod2daemon-flexvol:v3.15.1

INFO[14:23:31 CST] Generating etcd certs

INFO[14:23:32 CST] Synchronizing etcd certs

INFO[14:23:32 CST] Creating etcd service

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

INFO[14:23:43 CST] Starting etcd cluster

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

Configuration file will be created

INFO[14:23:43 CST] Refreshing etcd configuration

Waiting for etcd to start

INFO[14:23:49 CST] Backup etcd data regularly

INFO[14:23:49 CST] Get cluster status

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

Cluster will be created.

INFO[14:23:49 CST] Installing kube binaries

Push /packages/kubekey/v1.17.9/amd64/kubeadm to 192.168.13.44:/tmp/kubekey/kubeadm Done

Push /packages/kubekey/v1.17.9/amd64/kubelet to 192.168.13.44:/tmp/kubekey/kubelet Done

Push /packages/kubekey/v1.17.9/amd64/kubectl to 192.168.13.44:/tmp/kubekey/kubectl Done

Push /packages/kubekey/v1.17.9/amd64/helm to 192.168.13.44:/tmp/kubekey/helm Done

Push /packages/kubekey/v1.17.9/amd64/cni-plugins-linux-amd64-v0.8.6.tgz to 192.168.13.44:/tmp/kubekey/cni-plugins-linux-amd64-v0.8.6.tgz Done

INFO[14:23:55 CST] Initializing kubernetes cluster

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

W0317 14:23:56.144074 5944 defaults.go:186] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

W0317 14:23:56.144253 5944 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0317 14:23:56.144264 5944 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.9

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [zhupengfei-kubesphere-centos7-shanghai-area1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost lb.kubesphere.local zhupengfei-kubesphere-centos7-shanghai-area1 zhupengfei-kubesphere-centos7-shanghai-area1.cluster.local] and IPs [10.233.0.1 192.168.13.44 127.0.0.1 192.168.13.44 10.233.0.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[controlplane] Adding extra host path mount "host-time" to "kube-controller-manager"

W0317 14:23:59.642646 5944 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[controlplane] Adding extra host path mount "host-time" to "kube-controller-manager"

W0317 14:23:59.651999 5944 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[controlplane] Adding extra host path mount "host-time" to "kube-controller-manager"

W0317 14:23:59.653076 5944 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 37.511698 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node zhupengfei-kubesphere-centos7-shanghai-area1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node zhupengfei-kubesphere-centos7-shanghai-area1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 0pihyy.sm7pxmd16lw6p1hq

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token 0pihyy.sm7pxmd16lw6p1hq \

--discovery-token-ca-cert-hash sha256:5fdd7db96c2039b324ed66bbd66f96203dde7238b484f9e6d9133b9744adab43 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token 0pihyy.sm7pxmd16lw6p1hq \

--discovery-token-ca-cert-hash sha256:5fdd7db96c2039b324ed66bbd66f96203dde7238b484f9e6d9133b9744adab43

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

node/zhupengfei-kubesphere-centos7-shanghai-area1 untainted

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

node/zhupengfei-kubesphere-centos7-shanghai-area1 labeled

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

service "kube-dns" deleted

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

service/coredns created

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

configmap/nodelocaldns created

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

I0317 14:25:02.343163 8092 version.go:251] remote version is much newer: v1.20.4; falling back to: stable-1.17

W0317 14:25:02.990234 8092 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0317 14:25:02.990268 8092 validation.go:28] Cannot validate kubelet config - no validator is available

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

cd230f4006a0a62927fd429b113defef733cd1c4e694fbe394527946b6d0779c

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

secret/kubeadm-certs patched

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

secret/kubeadm-certs patched

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

secret/kubeadm-certs patched

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

W0317 14:25:03.477887 8310 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0317 14:25:03.477954 8310 validation.go:28] Cannot validate kubelet config - no validator is available

kubeadm join lb.kubesphere.local:6443 --token 7f65uq.zwz97ci1a0jrg2ju --discovery-token-ca-cert-hash sha256:5fdd7db96c2039b324ed66bbd66f96203dde7238b484f9e6d9133b9744adab43
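The join commands above carry two credentials: a bootstrap token and the SHA-256 hash of the cluster CA public key. A sketch, using hypothetical helper names, that pulls both out of a join command and checks their shape (token `[a-z0-9]{6}.[a-z0-9]{16}`, hash `sha256:` followed by 64 hex digits) before the command is pasted onto another node. It validates format only and does not contact the cluster.

```shell
#!/bin/sh
# is_bootstrap_token TOKEN: true when TOKEN looks like "abcdef.0123456789abcdef".
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# is_ca_cert_hash HASH: true when HASH looks like "sha256:<64 hex digits>".
is_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# join_field FLAG COMMAND: prints the word that follows FLAG in COMMAND.
join_field() {
  printf '%s\n' "$2" | awk -v flag="$1" '
    { for (i = 1; i < NF; i++) if ($i == flag) { print $(i + 1); exit } }
  '
}

# Sample taken from the transcript above.
cmd='kubeadm join lb.kubesphere.local:6443 --token 7f65uq.zwz97ci1a0jrg2ju --discovery-token-ca-cert-hash sha256:5fdd7db96c2039b324ed66bbd66f96203dde7238b484f9e6d9133b9744adab43'
token=$(join_field --token "$cmd")
hash=$(join_field --discovery-token-ca-cert-hash "$cmd")

if is_bootstrap_token "$token" && is_ca_cert_hash "$hash"; then
  echo "join command looks well-formed"
else
  echo "join command is malformed"
fi
```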

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

zhupengfei-kubesphere-centos7-shanghai-area1 NotReady master,worker 28s v1.17.9 192.168.13.44 <none> CentOS Linux 7 (Core) 3.10.0-1160.11.1.el7.x86_64 docker://19.3.8
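The node reports `NotReady` at this point because no network plugin is running yet; the Calico manifests are applied in the next step. A sketch that lists nodes whose STATUS is not `Ready` from `kubectl get nodes` text; `not_ready_nodes` is a hypothetical helper that reads the listing on stdin.

```shell
#!/bin/sh
# not_ready_nodes: reads `kubectl get nodes` output on stdin and prints
# the names of nodes whose first STATUS condition is not "Ready".
not_ready_nodes() {
  awk 'NR > 1 {
    # STATUS may carry suffixes such as "Ready,SchedulingDisabled".
    split($2, cond, ",")
    if (cond[1] != "Ready") print $1
  }'
}

# Run against the live cluster only when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes 2>/dev/null | not_ready_nodes
fi
```

An empty result means every listed node is `Ready`.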

INFO[14:25:03 CST] Deploying network plugin ...

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

INFO[14:25:05 CST] Joining nodes to cluster

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

storageclass.storage.k8s.io/local created

serviceaccount/openebs-maya-operator created

clusterrole.rbac.authorization.k8s.io/openebs-maya-operator created

clusterrolebinding.rbac.authorization.k8s.io/openebs-maya-operator created

configmap/openebs-ndm-config created

daemonset.apps/openebs-ndm created

deployment.apps/openebs-ndm-operator created

deployment.apps/openebs-localpv-provisioner created

INFO[14:25:05 CST] Deploying KubeSphere ...

v3.0.0

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

namespace/kubesphere-system created

namespace/kubesphere-monitoring-system created

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

secret/kube-etcd-client-certs created

[zhupengfei-kubesphere-centos7-shanghai-area1 192.168.13.44] MSG:

namespace/kubesphere-system unchanged

serviceaccount/ks-installer created

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

clusterconfiguration.installer.kubesphere.io/ks-installer created

INFO[14:30:46 CST] Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

[root@zhupengfei-kubesphere-centos7-shanghai-area1 packages]# # Verify the deployment

[root@zhupengfei-kubesphere-centos7-shanghai-area1 packages]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

2021-03-17T14:26:30+08:00 INFO : shell-operator latest

2021-03-17T14:26:30+08:00 INFO : Use temporary dir: /tmp/shell-operator

2021-03-17T14:26:30+08:00 INFO : Initialize hooks manager ...

2021-03-17T14:26:30+08:00 INFO : Search and load hooks ...

2021-03-17T14:26:30+08:00 INFO : Load hook config from '/hooks/kubesphere/installRunner.py'

2021-03-17T14:26:30+08:00 INFO : HTTP SERVER Listening on 0.0.0.0:9115

2021-03-17T14:26:31+08:00 INFO : Load hook config from '/hooks/kubesphere/schedule.sh'

2021-03-17T14:26:31+08:00 INFO : Initializing schedule manager ...

2021-03-17T14:26:31+08:00 INFO : KUBE Init Kubernetes client

2021-03-17T14:26:31+08:00 INFO : KUBE-INIT Kubernetes client is configured successfully

2021-03-17T14:26:31+08:00 INFO : MAIN: run main loop

2021-03-17T14:26:31+08:00 INFO : MAIN: add onStartup tasks

2021-03-17T14:26:31+08:00 INFO : QUEUE add all HookRun@OnStartup

2021-03-17T14:26:31+08:00 INFO : Running schedule manager ...

2021-03-17T14:26:31+08:00 INFO : MSTOR Create new metric shell_operator_live_ticks

2021-03-17T14:26:31+08:00 INFO : MSTOR Create new metric shell_operator_tasks_queue_length

2021-03-17T14:26:31+08:00 INFO : GVR for kind 'ClusterConfiguration' is installer.kubesphere.io/v1alpha1, Resource=clusterconfigurations

2021-03-17T14:26:31+08:00 INFO : EVENT Kube event '930b2e98-3b93-4852-926e-88588a687a39'

2021-03-17T14:26:31+08:00 INFO : QUEUE add TASK_HOOK_RUN@KUBE_EVENTS kubesphere/installRunner.py

2021-03-17T14:26:34+08:00 INFO : TASK_RUN HookRun@KUBE_EVENTS kubesphere/installRunner.py

2021-03-17T14:26:34+08:00 INFO : Running hook 'kubesphere/installRunner.py' binding 'KUBE_EVENTS' ...

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [preinstall : check k8s version] ******************************************

changed: [localhost]

TASK [preinstall : init k8s version] *******************************************

ok: [localhost]

TASK [preinstall : Stop if kubernetes version is nonsupport] *******************

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : check storage class] ****************************************

changed: [localhost]

TASK [preinstall : Stop if StorageClass was not found] *************************

skipping: [localhost]

TASK [preinstall : check default storage class] ********************************

changed: [localhost]

TASK [preinstall : Stop if defaultStorageClass was not found] ******************

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : Kubesphere | Checking kubesphere component] *****************

changed: [localhost]

TASK [preinstall : Kubesphere | Get kubesphere component version] **************

skipping: [localhost]

TASK [preinstall : Kubesphere | Get kubesphere component version] **************

skipping: [localhost] => (item=ks-openldap)

skipping: [localhost] => (item=ks-redis)

skipping: [localhost] => (item=ks-minio)

skipping: [localhost] => (item=ks-openpitrix)

skipping: [localhost] => (item=elasticsearch-logging)

skipping: [localhost] => (item=elasticsearch-logging-curator)

skipping: [localhost] => (item=istio)

skipping: [localhost] => (item=istio-init)

skipping: [localhost] => (item=jaeger-operator)

skipping: [localhost] => (item=ks-jenkins)

skipping: [localhost] => (item=ks-sonarqube)

skipping: [localhost] => (item=logging-fluentbit-operator)

skipping: [localhost] => (item=uc)

skipping: [localhost] => (item=metrics-server)

PLAY RECAP *********************************************************************

localhost : ok=8 changed=4 unreachable=0 failed=0 skipped=6 rescued=0 ignored=0

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [metrics-server : Metrics-Server | Checking old installation files] *******

ok: [localhost]

TASK [metrics-server : Metrics-Server | deleting old metrics-server] ***********

skipping: [localhost]

TASK [metrics-server : Metrics-Server | deleting old metrics-server files] *****

[DEPRECATION WARNING]: evaluating {'changed': False, 'stat': {'exists': False},

'failed': False} as a bare variable, this behaviour will go away and you might

need to add |bool to the expression in the future. Also see

CONDITIONAL_BARE_VARS configuration toggle.. This feature will be removed in

version 2.12. Deprecation warnings can be disabled by setting

deprecation_warnings=False in ansible.cfg.

ok: [localhost] => (item=metrics-server)

TASK [metrics-server : Metrics-Server | Getting metrics-server installation files] ***

changed: [localhost]

TASK [metrics-server : Metrics-Server | Creating manifests] ********************

changed: [localhost] => (item={'name': 'values', 'file': 'values.yaml', 'type': 'config'})

TASK [metrics-server : Metrics-Server | Check Metrics-Server] ******************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/helm list metrics-server -n kube-system\n", "delta": "0:00:00.046969", "end": "2021-03-17 14:27:02.225550", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:27:02.178581", "stderr": "Error: \"helm list\" accepts no arguments\n\nUsage: helm list [flags]", "stderr_lines": ["Error: \"helm list\" accepts no arguments", "", "Usage: helm list [flags]"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [metrics-server : Metrics-Server | Installing metrics-server] *************

changed: [localhost]

TASK [metrics-server : Metrics-Server | Installing metrics-server retry] *******

skipping: [localhost]

TASK [metrics-server : Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready] ***

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (60 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (59 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (58 retries left).

FAILED - RETRYING: Metrics-Server | Waitting for v1beta1.metrics.k8s.io ready (57 retries left).

changed: [localhost]

TASK [metrics-server : Metrics-Server | import metrics-server status] **********

changed: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=9 changed=6 unreachable=0 failed=0 skipped=5 rescued=0 ignored=1
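Each `PLAY RECAP` line summarizes one playbook run. A task that prints `fatal` followed by `...ignoring` is counted under `ignored`, not `failed`, which is why the run above still ends with `failed=0`. A sketch that reads the counters from recap lines; `recap_field` and `recap_clean` are hypothetical helpers.

```shell
#!/bin/sh
# recap_field NAME LINE: prints the value of NAME=<n> from one recap line.
recap_field() {
  printf '%s\n' "$2" | awk -v name="$1" '{
    for (i = 1; i <= NF; i++) {
      n = split($i, kv, "=")
      if (n == 2 && kv[1] == name) { print kv[2]; exit }
    }
  }'
}

# recap_clean: reads log text on stdin; succeeds only when every recap
# line found reports failed=0 and unreachable=0.
recap_clean() {
  awk '
    /ok=[0-9]+/ && /failed=[0-9]+/ {
      if ($0 !~ /failed=0( |$)/ || $0 !~ /unreachable=0( |$)/) bad = 1
    }
    END { exit bad + 0 }
  '
}

line='localhost : ok=9 changed=6 unreachable=0 failed=0 skipped=5 rescued=0 ignored=1'
echo "failed=$(recap_field failed "$line") ignored=$(recap_field ignored "$line")"
```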

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [common : Kubesphere | Check kube-node-lease namespace] *******************

changed: [localhost]

TASK [common : KubeSphere | Get system namespaces] *****************************

ok: [localhost]

TASK [common : set_fact] *******************************************************

ok: [localhost]

TASK [common : debug] **********************************************************

ok: [localhost] => {

"msg": [

"kubesphere-system",

"kubesphere-controls-system",

"kubesphere-monitoring-system",

"kube-node-lease"

]

}

TASK [common : KubeSphere | Create kubesphere namespace] ***********************

changed: [localhost] => (item=kubesphere-system)

changed: [localhost] => (item=kubesphere-controls-system)

changed: [localhost] => (item=kubesphere-monitoring-system)

changed: [localhost] => (item=kube-node-lease)

TASK [common : KubeSphere | Labeling system-workspace] *************************

changed: [localhost] => (item=default)

changed: [localhost] => (item=kube-public)

changed: [localhost] => (item=kube-system)

changed: [localhost] => (item=kubesphere-system)

changed: [localhost] => (item=kubesphere-controls-system)

changed: [localhost] => (item=kubesphere-monitoring-system)

changed: [localhost] => (item=kube-node-lease)

TASK [common : KubeSphere | Create ImagePullSecrets] ***************************

changed: [localhost] => (item=default)

changed: [localhost] => (item=kube-public)

changed: [localhost] => (item=kube-system)

changed: [localhost] => (item=kubesphere-system)

changed: [localhost] => (item=kubesphere-controls-system)

changed: [localhost] => (item=kubesphere-monitoring-system)

changed: [localhost] => (item=kube-node-lease)

TASK [common : Kubesphere | Label namespace for network policy] ****************

changed: [localhost]

TASK [common : KubeSphere | Getting kubernetes master num] *********************

changed: [localhost]

TASK [common : KubeSphere | Setting master num] ********************************

ok: [localhost]

TASK [common : Kubesphere | Getting common component installation files] *******

changed: [localhost] => (item=common)

changed: [localhost] => (item=ks-crds)

TASK [common : KubeSphere | Create KubeSphere crds] ****************************

changed: [localhost]

TASK [common : KubeSphere | Recreate KubeSphere crds] **************************

changed: [localhost]

TASK [common : KubeSphere | check k8s version] *********************************

changed: [localhost]

TASK [common : Kubesphere | Getting common component installation files] *******

changed: [localhost] => (item=snapshot-controller)

TASK [common : Kubesphere | Creating snapshot controller values] ***************

changed: [localhost] => (item={'name': 'custom-values-snapshot-controller', 'file': 'custom-values-snapshot-controller.yaml'})

TASK [common : Kubesphere | Remove old snapshot crd] ***************************

changed: [localhost]

TASK [common : Kubesphere | Deploy snapshot controller] ************************

changed: [localhost]

TASK [common : Kubesphere | Checking openpitrix common component] **************

changed: [localhost]

TASK [common : include_tasks] **************************************************

skipping: [localhost] => (item={'op': 'openpitrix-db', 'ks': 'mysql-pvc'})

skipping: [localhost] => (item={'op': 'openpitrix-etcd', 'ks': 'etcd-pvc'})

TASK [common : Getting PersistentVolumeName (mysql)] ***************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeSize (mysql)] ***************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeName (mysql)] ***************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeSize (mysql)] ***************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeName (etcd)] ****************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeSize (etcd)] ****************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeName (etcd)] ****************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeSize (etcd)] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Check mysql PersistentVolumeClaim] *****************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system mysql-pvc -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.092005", "end": "2021-03-17 14:28:32.960403", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:32.868398", "stderr": "Error from server (NotFound): persistentvolumeclaims \"mysql-pvc\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"mysql-pvc\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting mysql db pv size] **************************

skipping: [localhost]

TASK [common : Kubesphere | Check redis PersistentVolumeClaim] *****************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system redis-pvc -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.078311", "end": "2021-03-17 14:28:33.371235", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:33.292924", "stderr": "Error from server (NotFound): persistentvolumeclaims \"redis-pvc\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"redis-pvc\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting redis db pv size] **************************

skipping: [localhost]

TASK [common : Kubesphere | Check minio PersistentVolumeClaim] *****************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system minio -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.083001", "end": "2021-03-17 14:28:33.783767", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:33.700766", "stderr": "Error from server (NotFound): persistentvolumeclaims \"minio\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"minio\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting minio pv size] *****************************

skipping: [localhost]

TASK [common : Kubesphere | Check openldap PersistentVolumeClaim] **************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system openldap-pvc-openldap-0 -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.085865", "end": "2021-03-17 14:28:34.205675", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:34.119810", "stderr": "Error from server (NotFound): persistentvolumeclaims \"openldap-pvc-openldap-0\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"openldap-pvc-openldap-0\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting openldap pv size] **************************

skipping: [localhost]

TASK [common : Kubesphere | Check etcd db PersistentVolumeClaim] ***************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system etcd-pvc -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.082510", "end": "2021-03-17 14:28:34.610658", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:34.528148", "stderr": "Error from server (NotFound): persistentvolumeclaims \"etcd-pvc\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"etcd-pvc\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting etcd pv size] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Check redis ha PersistentVolumeClaim] **************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-system data-redis-ha-server-0 -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.085772", "end": "2021-03-17 14:28:35.015016", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:34.929244", "stderr": "Error from server (NotFound): persistentvolumeclaims \"data-redis-ha-server-0\" not found", "stderr_lines": ["Error from server (NotFound): persistentvolumeclaims \"data-redis-ha-server-0\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting redis ha pv size] **************************

skipping: [localhost]

TASK [common : Kubesphere | Check es-master PersistentVolumeClaim] *************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-logging-system data-elasticsearch-logging-discovery-0 -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.087813", "end": "2021-03-17 14:28:35.445058", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:35.357245", "stderr": "Error from server (NotFound): namespaces \"kubesphere-logging-system\" not found", "stderr_lines": ["Error from server (NotFound): namespaces \"kubesphere-logging-system\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting es master pv size] *************************

skipping: [localhost]

TASK [common : Kubesphere | Check es data PersistentVolumeClaim] ***************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl get pvc -n kubesphere-logging-system data-elasticsearch-logging-data-0 -o jsonpath='{.status.capacity.storage}'\n", "delta": "0:00:00.101835", "end": "2021-03-17 14:28:35.873008", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:35.771173", "stderr": "Error from server (NotFound): namespaces \"kubesphere-logging-system\" not found", "stderr_lines": ["Error from server (NotFound): namespaces \"kubesphere-logging-system\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Setting es data pv size] ***************************

skipping: [localhost]

TASK [common : Kubesphere | Creating common component manifests] ***************

changed: [localhost] => (item={'path': 'etcd', 'file': 'etcd.yaml'})

changed: [localhost] => (item={'name': 'mysql', 'file': 'mysql.yaml'})

changed: [localhost] => (item={'path': 'redis', 'file': 'redis.yaml'})

TASK [common : Kubesphere | Creating mysql sercet] *****************************

changed: [localhost]

TASK [common : Kubesphere | Deploying etcd and mysql] **************************

skipping: [localhost] => (item=etcd.yaml)

skipping: [localhost] => (item=mysql.yaml)

TASK [common : Kubesphere | Getting minio installation files] ******************

skipping: [localhost] => (item=minio-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-minio', 'file': 'custom-values-minio.yaml'})

TASK [common : Kubesphere | Check minio] ***************************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy minio] **************************************

skipping: [localhost]

TASK [common : debug] **********************************************************

skipping: [localhost]

TASK [common : fail] ***********************************************************

skipping: [localhost]

TASK [common : Kubesphere | create minio config directory] *********************

skipping: [localhost]

TASK [common : Kubesphere | Creating common component manifests] ***************

skipping: [localhost] => (item={'path': '/root/.config/rclone', 'file': 'rclone.conf'})

TASK [common : include_tasks] **************************************************

skipping: [localhost] => (item=helm)

skipping: [localhost] => (item=vmbased)

TASK [common : Kubesphere | import minio status] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Check ha-redis] ************************************

skipping: [localhost]

TASK [common : Kubesphere | Getting redis installation files] ******************

skipping: [localhost] => (item=redis-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-redis', 'file': 'custom-values-redis.yaml'})

TASK [common : Kubesphere | Check old redis status] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Delete and backup old redis svc] *******************

skipping: [localhost]

TASK [common : Kubesphere | Deploying redis] ***********************************

skipping: [localhost]

TASK [common : Kubesphere | Getting redis PodIp] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Creating redis migration script] *******************

skipping: [localhost] => (item={'path': '/etc/kubesphere', 'file': 'redisMigrate.py'})

TASK [common : Kubesphere | Check redis-ha status] *****************************

skipping: [localhost]

TASK [common : ks-logging | Migrating redis data] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Disable old redis] *********************************

skipping: [localhost]

TASK [common : Kubesphere | Deploying redis] ***********************************

skipping: [localhost] => (item=redis.yaml)

TASK [common : Kubesphere | import redis status] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Getting openldap installation files] ***************

skipping: [localhost] => (item=openldap-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-openldap', 'file': 'custom-values-openldap.yaml'})

TASK [common : Kubesphere | Check old openldap status] *************************

skipping: [localhost]

TASK [common : KubeSphere | Shutdown ks-account] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Delete and backup old openldap svc] ****************

skipping: [localhost]

TASK [common : Kubesphere | Check openldap] ************************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy openldap] ***********************************

skipping: [localhost]

TASK [common : Kubesphere | Load old openldap data] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Check openldap-ha status] **************************

skipping: [localhost]

TASK [common : Kubesphere | Get openldap-ha pod list] **************************

skipping: [localhost]

TASK [common : Kubesphere | Get old openldap data] *****************************

skipping: [localhost]

TASK [common : Kubesphere | Migrating openldap data] ***************************

skipping: [localhost]

TASK [common : Kubesphere | Disable old openldap] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Restart openldap] **********************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting ks-account] *****************************

skipping: [localhost]

TASK [common : Kubesphere | import openldap status] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Check ha-redis] ************************************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/helm list -n kubesphere-system | grep \"ks-redis\"\n", "delta": "0:00:00.073871", "end": "2021-03-17 14:28:40.244147", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:40.170276", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Getting redis installation files] ******************

skipping: [localhost] => (item=redis-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-redis', 'file': 'custom-values-redis.yaml'})

TASK [common : Kubesphere | Check old redis status] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Delete and backup old redis svc] *******************

skipping: [localhost]

TASK [common : Kubesphere | Deploying redis] ***********************************

skipping: [localhost]

TASK [common : Kubesphere | Getting redis PodIp] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Creating redis migration script] *******************

skipping: [localhost] => (item={'path': '/etc/kubesphere', 'file': 'redisMigrate.py'})

TASK [common : Kubesphere | Check redis-ha status] *****************************

skipping: [localhost]

TASK [common : ks-logging | Migrating redis data] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Disable old redis] *********************************

skipping: [localhost]

TASK [common : Kubesphere | Deploying redis] ***********************************

changed: [localhost] => (item=redis.yaml)

TASK [common : Kubesphere | import redis status] *******************************

changed: [localhost]

TASK [common : Kubesphere | Getting openldap installation files] ***************

changed: [localhost] => (item=openldap-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

changed: [localhost] => (item={'name': 'custom-values-openldap', 'file': 'custom-values-openldap.yaml'})

TASK [common : Kubesphere | Check old openldap status] *************************

changed: [localhost]

TASK [common : KubeSphere | Shutdown ks-account] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Delete and backup old openldap svc] ****************

skipping: [localhost]

TASK [common : Kubesphere | Check openldap] ************************************

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/helm list -n kubesphere-system | grep \"ks-openldap\"\n", "delta": "0:00:00.077924", "end": "2021-03-17 14:28:47.550034", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:28:47.472110", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

TASK [common : Kubesphere | Deploy openldap] ***********************************

changed: [localhost]

TASK [common : Kubesphere | Load old openldap data] ****************************

skipping: [localhost]

TASK [common : Kubesphere | Check openldap-ha status] **************************

skipping: [localhost]

TASK [common : Kubesphere | Get openldap-ha pod list] **************************

skipping: [localhost]

TASK [common : Kubesphere | Get old openldap data] *****************************

skipping: [localhost]

TASK [common : Kubesphere | Migrating openldap data] ***************************

skipping: [localhost]

TASK [common : Kubesphere | Disable old openldap] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Restart openldap] **********************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting ks-account] *****************************

skipping: [localhost]

TASK [common : Kubesphere | import openldap status] ****************************

changed: [localhost]

TASK [common : Kubesphere | Getting minio installation files] ******************

skipping: [localhost] => (item=minio-ha)

TASK [common : Kubesphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-minio', 'file': 'custom-values-minio.yaml'})

TASK [common : Kubesphere | Check minio] ***************************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy minio] **************************************

skipping: [localhost]

TASK [common : debug] **********************************************************

skipping: [localhost]

TASK [common : fail] ***********************************************************

skipping: [localhost]

TASK [common : Kubesphere | create minio config directory] *********************

skipping: [localhost]

TASK [common : Kubesphere | Creating common component manifests] ***************

skipping: [localhost] => (item={'path': '/root/.config/rclone', 'file': 'rclone.conf'})

TASK [common : include_tasks] **************************************************

skipping: [localhost] => (item=helm)

skipping: [localhost] => (item=vmbased)

TASK [common : Kubesphere | import minio status] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Deploying common component] ************************

skipping: [localhost] => (item=mysql.yaml)

TASK [common : Kubesphere | import mysql status] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Deploying common component] ************************

skipping: [localhost] => (item=etcd.yaml)

TASK [common : Kubesphere | Getting elasticsearch and curator installation files] ***

skipping: [localhost]

TASK [common : Kubesphere | Creating custom manifests] *************************

skipping: [localhost] => (item={'name': 'custom-values-elasticsearch', 'file': 'custom-values-elasticsearch.yaml'})

skipping: [localhost] => (item={'name': 'custom-values-elasticsearch-curator', 'file': 'custom-values-elasticsearch-curator.yaml'})

TASK [common : Kubesphere | Check elasticsearch data StatefulSet] **************

skipping: [localhost]

TASK [common : Kubesphere | Check elasticsearch storageclass] ******************

skipping: [localhost]

TASK [common : Kubesphere | Comment elasticsearch storageclass parameter] ******

skipping: [localhost]

TASK [common : KubeSphere | Check internal es] *********************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy elasticsearch-logging] **********************

skipping: [localhost]

TASK [common : Kubesphere | Get PersistentVolume Name] *************************

skipping: [localhost]

TASK [common : Kubesphere | Patch PersistentVolume (persistentVolumeReclaimPolicy)] ***

skipping: [localhost]

TASK [common : Kubesphere | Delete elasticsearch] ******************************

skipping: [localhost]

TASK [common : Kubesphere | Waiting for seconds] *******************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy elasticsearch-logging] **********************

skipping: [localhost]

TASK [common : Kubesphere | import es status] **********************************

skipping: [localhost]

TASK [common : Kubesphere | Deploy elasticsearch-logging-curator] **************

skipping: [localhost]

TASK [common : Kubesphere | Getting elasticsearch and curator installation files] ***

skipping: [localhost]

TASK [common : Kubesphere | Creating custom manifests] *************************

skipping: [localhost] => (item={'path': 'fluentbit', 'file': 'custom-fluentbit-fluentBit.yaml'})

skipping: [localhost] => (item={'path': 'init', 'file': 'custom-fluentbit-operator-deployment.yaml'})

skipping: [localhost] => (item={'path': 'migrator', 'file': 'custom-migrator-job.yaml'})

TASK [common : Kubesphere | Checking fluentbit-version] ************************

skipping: [localhost]

TASK [common : Kubesphere | Backup old fluentbit crd] **************************

skipping: [localhost]

TASK [common : Kubesphere | Deleting old fluentbit operator] *******************

skipping: [localhost] => (item={'type': 'deploy', 'name': 'logging-fluentbit-operator'})

skipping: [localhost] => (item={'type': 'fluentbits.logging.kubesphere.io', 'name': 'fluent-bit'})

skipping: [localhost] => (item={'type': 'ds', 'name': 'fluent-bit'})

skipping: [localhost] => (item={'type': 'crd', 'name': 'fluentbits.logging.kubesphere.io'})

TASK [common : Kubesphere | Prepare fluentbit operator setup] ******************

skipping: [localhost]

TASK [common : Kubesphere | Migrate fluentbit operator old config] *************

skipping: [localhost]

TASK [common : Kubesphere | Deploy new fluentbit operator] *********************

skipping: [localhost]

TASK [common : Kubesphere | import fluentbit status] ***************************

skipping: [localhost]

TASK [common : Setting persistentVolumeReclaimPolicy (mysql)] ******************

skipping: [localhost]

TASK [common : Setting persistentVolumeReclaimPolicy (etcd)] *******************

skipping: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=39 changed=34 unreachable=0 failed=0 skipped=118 rescued=0 ignored=10
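The `fatal: ... ...ignoring` entries above did not abort the run: the recap reports `failed=0` with `ignored=10`, which suggests these checks are allowed to fail on a fresh install where the PVCs and Helm releases do not exist yet. A minimal sketch for reading those counters out of a recap line follows; the helper name `recap_field` is hypothetical and not part of KubeKey or ks-installer.

```shell
#!/bin/sh
# Hypothetical helper: extract one counter (e.g. failed, ignored)
# from an Ansible PLAY RECAP line.
recap_field() {
    # $1 = recap line, $2 = counter name
    printf '%s\n' "$1" | tr ' ' '\n' | sed -n "s/^$2=\([0-9][0-9]*\)\$/\1/p"
}

# Recap line copied from the log above.
recap='localhost : ok=39 changed=34 unreachable=0 failed=0 skipped=118 rescued=0 ignored=10'

failed=$(recap_field "$recap" failed)
ignored=$(recap_field "$recap" ignored)

if [ "$failed" -eq 0 ]; then
    echo "play succeeded; $ignored task failure(s) were ignored"
else
    echo "play failed: $failed task(s)"
fi
```

Only a non-zero `failed` or `unreachable` count would indicate that the play itself did not complete.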

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : include_tasks] ************************************************

skipping: [localhost]

TASK [download : Download items] ***********************************************

skipping: [localhost]

TASK [download : Sync container] ***********************************************

skipping: [localhost]

TASK [kubesphere-defaults : Configure defaults] ********************************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [ks-core/prepare : KubeSphere | Check core components (1)] ****************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Check core components (2)] ****************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Check core components (3)] ****************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Check core components (4)] ****************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Update ks-core status] ********************

skipping: [localhost]

TASK [ks-core/prepare : set_fact] **********************************************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Create KubeSphere dir] ********************

ok: [localhost]

TASK [ks-core/prepare : KubeSphere | Getting installation init files] **********

changed: [localhost] => (item=ks-init)

TASK [ks-core/prepare : Kubesphere | Checking account init] ********************

changed: [localhost]

TASK [ks-core/prepare : Kubesphere | Init account] *****************************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Init KubeSphere] **************************

changed: [localhost] => (item=iam-accounts.yaml)

changed: [localhost] => (item=webhook-secret.yaml)

changed: [localhost] => (item=users.iam.kubesphere.io.yaml)

TASK [ks-core/prepare : KubeSphere | Getting controls-system file] *************

changed: [localhost] => (item={'name': 'kubesphere-controls-system', 'file': 'kubesphere-controls-system.yaml'})

TASK [ks-core/prepare : KubeSphere | Installing controls-system] ***************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Generate kubeconfig-admin] ****************

skipping: [localhost]

TASK [ks-core/init-token : KubeSphere | Create KubeSphere dir] *****************

ok: [localhost]

TASK [ks-core/init-token : KubeSphere | Getting installation init files] *******

changed: [localhost] => (item=jwt-script)

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

ok: [localhost]

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

skipping: [localhost]

TASK [ks-core/init-token : KubeSphere | Enable Token Script] *******************

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Getting KS Token] **********************

changed: [localhost]

TASK [ks-core/init-token : Kubesphere | Checking kubesphere secrets] ***********

changed: [localhost]

TASK [ks-core/init-token : Kubesphere | Delete kubesphere secret] **************

skipping: [localhost]

TASK [ks-core/init-token : KubeSphere | Create components token] ***************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Getting kubernetes version] ***************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Setting kubernetes version] ***************

ok: [localhost]

TASK [ks-core/ks-core : KubeSphere | Getting kubernetes master num] ************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Setting master num] ***********************

ok: [localhost]

TASK [ks-core/ks-core : ks-console | Checking ks-console svc] ******************

changed: [localhost]

TASK [ks-core/ks-core : ks-console | Getting ks-console svc port] **************

skipping: [localhost]

TASK [ks-core/ks-core : ks-console | Setting console_port] *********************

skipping: [localhost]

TASK [ks-core/ks-core : KubeSphere | Getting Ingress installation files] *******

changed: [localhost] => (item=ingress)

changed: [localhost] => (item=ks-apiserver)

changed: [localhost] => (item=ks-console)

changed: [localhost] => (item=ks-controller-manager)

TASK [ks-core/ks-core : KubeSphere | Creating manifests] ***********************

changed: [localhost] => (item={'path': 'ingress', 'file': 'ingress-controller.yaml', 'type': 'config'})

changed: [localhost] => (item={'path': 'ks-apiserver', 'file': 'ks-apiserver.yml', 'type': 'deploy'})

changed: [localhost] => (item={'path': 'ks-controller-manager', 'file': 'ks-controller-manager.yaml', 'type': 'deploy'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-config.yml', 'type': 'config'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-deployment.yml', 'type': 'deploy'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-svc.yml', 'type': 'svc'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'sample-bookinfo-configmap.yaml', 'type': 'config'})

TASK [ks-core/ks-core : KubeSphere | Delete Ingress-controller configmap] ******

fatal: [localhost]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/kubectl delete cm -n kubesphere-system ks-router-config\n", "delta": "0:00:05.811622", "end": "2021-03-17 14:29:28.261312", "msg": "non-zero return code", "rc": 1, "start": "2021-03-17 14:29:22.449690", "stderr": "Error from server (NotFound): configmaps \"ks-router-config\" not found", "stderr_lines": ["Error from server (NotFound): configmaps \"ks-router-config\" not found"], "stdout": "", "stdout_lines": []}

...ignoring

TASK [ks-core/ks-core : KubeSphere | Creating Ingress-controller configmap] ****

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Creating ks-core] *************************

changed: [localhost] => (item={'path': 'ks-apiserver', 'file': 'ks-apiserver.yml'})

changed: [localhost] => (item={'path': 'ks-controller-manager', 'file': 'ks-controller-manager.yaml'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-config.yml'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'sample-bookinfo-configmap.yaml'})

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-deployment.yml'})

TASK [ks-core/ks-core : KubeSphere | Check ks-console svc] *********************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Creating ks-console svc] ******************

changed: [localhost] => (item={'path': 'ks-console', 'file': 'ks-console-svc.yml'})

TASK [ks-core/ks-core : KubeSphere | Patch ks-console svc] *********************

skipping: [localhost]

TASK [ks-core/ks-core : KubeSphere | import ks-core status] ********************

changed: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=31 changed=25 unreachable=0 failed=0 skipped=13 rescued=0 ignored=1

Start installing monitoring

Start installing multicluster

**************************************************

task monitoring status is running

task multicluster status is successful

total: 2 completed:1

**************************************************

task monitoring status is successful

task multicluster status is successful

total: 2 completed:2

**************************************************
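The installer prints a `total: N completed:M` line on each polling round until both numbers match. As a rough sketch, a script waiting on the installation could test such a line like this; the function name `all_tasks_done` is an assumption made for illustration, and the line format is taken only from the output shown above.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a status line such as
# "total: 2 completed:2" shows that every installer task has completed.
all_tasks_done() {
    pattern='s/^total: *\([0-9][0-9]*\) *completed: *\([0-9][0-9]*\)$/'
    total=$(printf '%s\n' "$1" | sed -n "${pattern}\\1/p")
    completed=$(printf '%s\n' "$1" | sed -n "${pattern}\\2/p")
    # An unparseable line counts as "not done".
    [ -n "$total" ] && [ "$total" -eq "$completed" ]
}

for line in 'total: 2 completed:1' 'total: 2 completed:2'; do
    if all_tasks_done "$line"; then
        echo "$line -> installation finished"
    else
        echo "$line -> still installing"
    fi
done
```

In this run the monitoring task was the one still running while multicluster had already reported success, so the counter stayed at 1 of 2 until monitoring finished.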

#####################################################

### Welcome to KubeSphere! ###

#####################################################

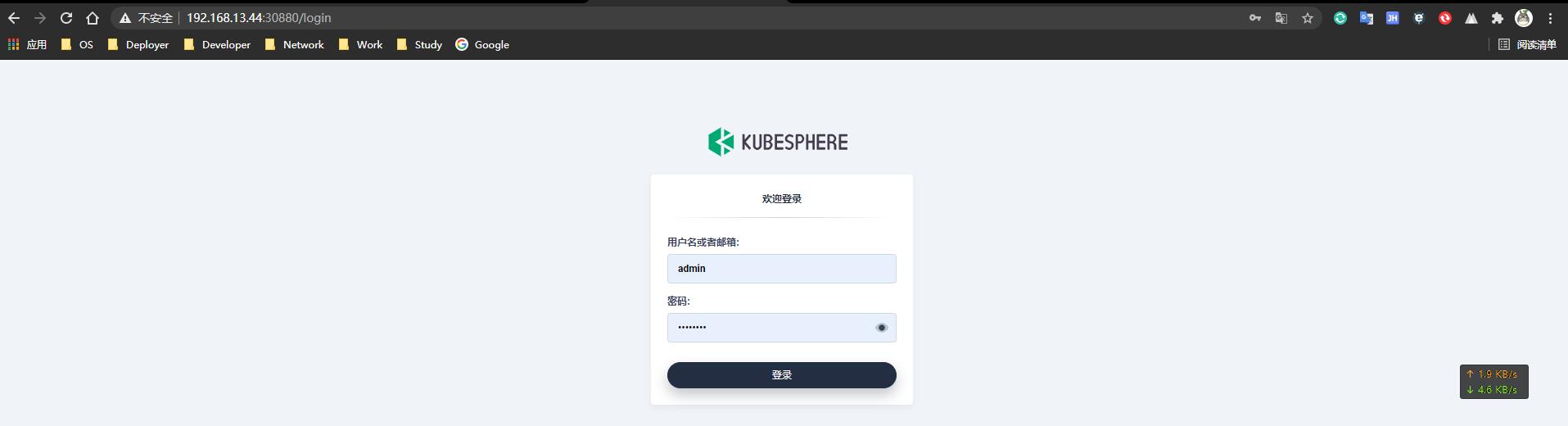

Console: http://192.168.13.44:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After logging into the console, please check the monitoring status of service components in the "Cluster Management". If any service is not ready, please wait patiently until all components are ready.

2. Please modify the default password after login.

#####################################################

https://kubesphere.io 2021-03-17 14:32:30

#####################################################

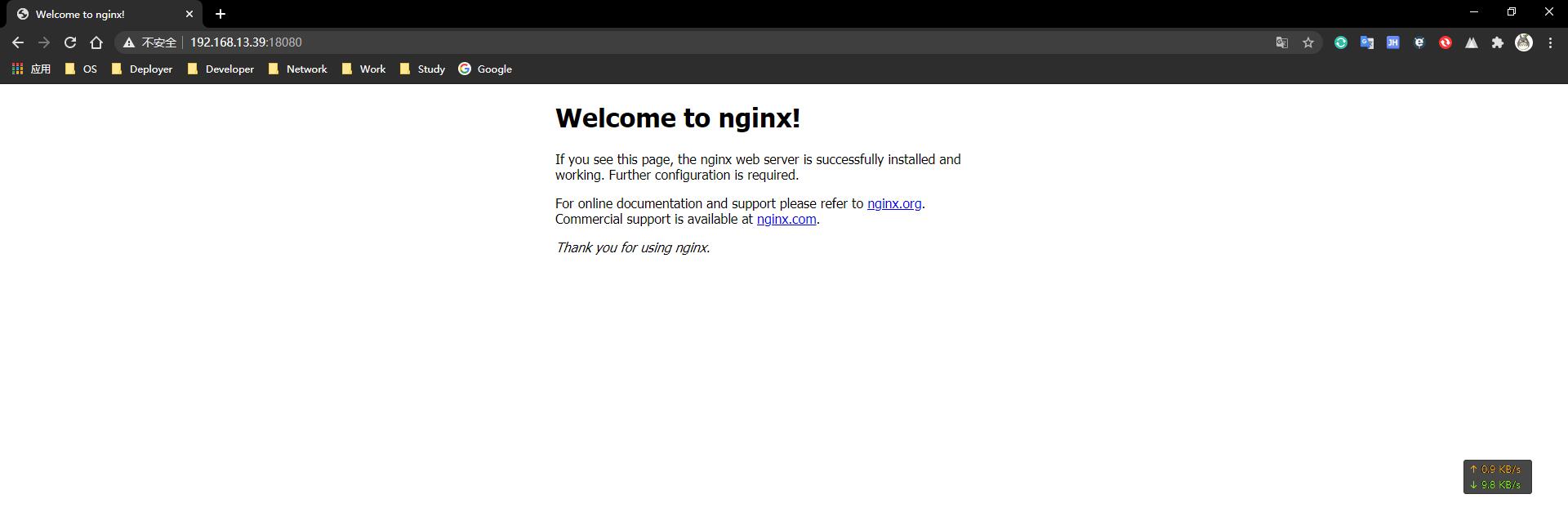

Web access
